What's the point of using Docker containers?

Introduction

Originally, operating systems were designed to run a large number of independent processes. In practice, however, dependencies on specific versions of libraries and specific resource requirements for each application process led to using one operating system – and hence one server – per application. For instance, a database server typically only runs a database, while an application server is hosted on another machine.

Compute virtualization solves this problem, but at a price – each application needs a full operating system, leading to high licensing and systems management costs. And because even the smallest application needs a full operating system, a lot of memory and many CPU cycles are spent just to get isolation between applications. Container technology is a way to solve this issue.

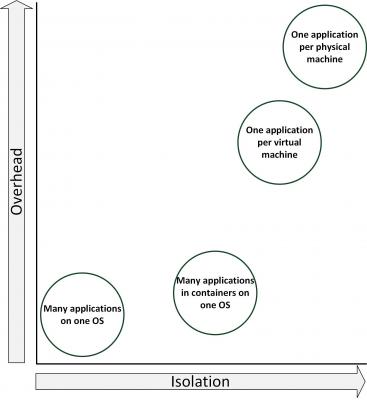

The figure above shows how the degree of isolation between applications relates to the overhead of running each application. Running each application on a dedicated physical machine provides the highest isolation, but the overhead is very high. An operating system, on the other hand, provides much less isolation, but at a very low overhead per application.

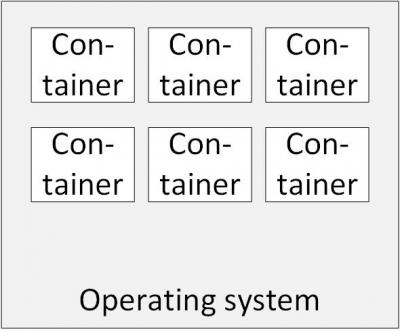

Container technology, also known as operating-system-level virtualization, is a server virtualization method in which the kernel of an operating system provides multiple isolated user-space instances, instead of just one. These containers look and feel like real servers from the point of view of their owners and users, but they share the same operating system kernel. This isolation enables the operating system to run multiple processes, where each process shares nothing but the kernel.

Containers are not new – the first UNIX-based containers, introduced in 1979, provided isolation of the root file system via the chroot operation. Solaris subsequently pioneered and explored many enhancements, and Linux control groups (cgroups) adopted many of these ideas.

Containers have been part of the Linux kernel since 2008. What is new is the use of containers to encapsulate all application components, such as dependencies and services. And when all dependencies are encapsulated, applications become portable.

Using containers has a number of benefits:

- Isolation – applications or application components can be encapsulated in containers, each operating independently and isolated from each other.

- Portability – since containers typically contain everything the embedded application or application component needs to function, including libraries and patches, they can be run on any infrastructure that is capable of running containers using the same kernel version.

- Easy deployment – containers allow developers to quickly deploy new software versions, as the containers they define can be moved to production unaltered.

Container technology

Containers are based on three technologies that are all part of the Linux kernel:

- Chroot (also known as a jail) - changes the apparent root directory for the currently running process and its children, and ensures that these processes cannot access files outside the designated directory tree. Chroot was available in Unix as early as 1979.

- Cgroups - limit and isolate the resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes. Cgroups have been part of the Linux kernel since 2008.

- Namespaces - allow complete isolation of an application's view of the operating environment, including process trees, networking, user IDs, and mounted file systems. Namespaces have been part of the Linux kernel since 2002.
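To make the namespace and cgroup ideas a bit more concrete, here is a minimal Python sketch that inspects which namespaces and cgroups a process belongs to. It assumes a Linux system with `/proc` mounted; the function names (`parse_ns_link`, `parse_cgroup_line`, `describe_process`) are made up for this illustration and are not part of Docker or LXC.

```python
import os
import re
from pathlib import Path

# Symlinks in /proc/<pid>/ns/ point at targets such as "pid:[4026531836]".
_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def parse_cgroup_line(line):
    """Split one /proc/<pid>/cgroup line of the form 'hierarchy-ID:controllers:path'."""
    hierarchy_id, controllers, path = line.rstrip("\n").split(":", 2)
    return {
        "hierarchy": int(hierarchy_id),
        "controllers": [c for c in controllers.split(",") if c],
        "path": path,
    }


def describe_process(pid="self"):
    """Return namespace inodes and cgroup membership, or None if /proc is unavailable."""
    proc = Path("/proc") / str(pid)
    ns_dir = proc / "ns"
    cgroup_file = proc / "cgroup"
    if not ns_dir.is_dir() or not cgroup_file.is_file():
        return None  # not Linux, or /proc is not mounted
    namespaces = {}
    for entry in sorted(os.listdir(ns_dir)):
        _kind, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        namespaces[entry] = inode
    cgroups = [
        parse_cgroup_line(line)
        for line in cgroup_file.read_text().splitlines()
        if line
    ]
    return {"namespaces": namespaces, "cgroups": cgroups}
```

Two processes that report the same inode number for a given namespace type share that namespace. Calling `describe_process()` inside a container and again on the host would typically show different inode numbers and different cgroup paths.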

Linux Containers (LXC), introduced in 2008, combines chroot, cgroups, and namespaces to provide isolated environments called containers.

Docker can use LXC as one of its execution drivers. It adds a Union File System (UFS) – a way of combining multiple directories into one that appears to contain their combined contents – to the containers, allowing multiple layers of software to be "stacked". Docker also automates the deployment of applications inside containers.
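The stacking idea can be modelled in a few lines of Python. This is only a conceptual sketch, not how Docker implements its storage: each layer is a plain dictionary from file path to contents, and the standard library's `collections.ChainMap` provides the merged view. The layer names and file contents are invented for the example.

```python
from collections import ChainMap

# Each layer maps a file path to its contents (hypothetical example data).
base_os = {"/bin/sh": "shell binary", "/etc/os-release": "base OS 1.0"}
runtime = {"/usr/bin/python": "interpreter", "/etc/os-release": "base OS 1.0 + runtime"}
app = {"/app/main.py": "print('hello')"}


def union_view(*layers):
    """Merge layers given bottom first; files in upper layers shadow lower ones."""
    # ChainMap searches its first mapping first, so the top layer must come first.
    return ChainMap(*reversed(layers))


image = union_view(base_os, runtime, app)

# A running container adds an empty writable layer on top of the image layers.
# Writes land only in that top layer, so the image layers stay untouched.
container = image.new_child()
container["/tmp/scratch"] = "temporary data"
```

Here `image["/etc/os-release"]` resolves to the `runtime` version because that layer sits above `base_os`, while `/tmp/scratch` exists only in the container's own top layer.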

Containers and security

While containers provide some isolation, they still share the same underlying kernel. Isolation between containers on the same machine is much lower than virtual machine isolation. Virtual machines get their isolation from hardware, using specialized CPU instructions. Containers don't have this level of isolation. However, some operating systems, such as Joyent's SmartOS, run on bare metal and provide containers with hardware-based isolation using the same specialized CPU instructions.

Since developers define the contents of containers, security officers lose control over what runs inside them, which could lead to unnoticed vulnerabilities. Containers might include multiple versions of tools, unpatched software, outdated software, or unlicensed software. To solve this issue, a repository with predefined and approved container components and an approved container hierarchy can be implemented.

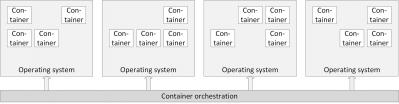
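A check against such a repository of approved components could look roughly like the following Python sketch. The component names, version strings, and the `unapproved_components` function are all hypothetical. A real setup would query an actual registry instead of a hard-coded dictionary.

```python
# Hypothetical allowlist: component name -> set of approved versions.
APPROVED = {
    "base-os": {"1.2", "1.3"},
    "java-runtime": {"8u92"},
    "web-server": {"2.4.20"},
}


def unapproved_components(definition, approved=APPROVED):
    """Return the (name, version) pairs of a container definition that are not approved."""
    return [
        (name, version)
        for name, version in definition
        if version not in approved.get(name, set())
    ]


# Example container definition as a list of (component, version) pairs.
definition = [
    ("base-os", "1.3"),
    ("java-runtime", "7u80"),   # outdated version, not on the allowlist
    ("custom-tool", "0.1"),     # unknown component, not on the allowlist
]
violations = unapproved_components(definition)
```

A build pipeline could refuse to publish any container for which this list is not empty.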

Container orchestration

Where an operating system abstracts resources such as CPU, RAM, and network connectivity and provides services to applications, container orchestration, also known as a datacenter operating system, abstracts the resources of a cluster of machines and provides services to containers. A container orchestrator allows containers to be run anywhere on the cluster of machines – it schedules the containers to any machine that has resources available. It acts like a kernel for the combined resources of an entire datacenter instead of the resources of just a single computer.
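As a rough illustration of the scheduling step, the Python sketch below places each container on the first machine that still has enough free CPU and memory. Real orchestrators use far more elaborate strategies; the `Machine` and `Container` classes and the first-fit rule are assumptions made for this example only.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    name: str
    free_cpu: float          # free CPU cores
    free_mem_mb: int         # free memory in MB
    containers: list = field(default_factory=list)


@dataclass(frozen=True)
class Container:
    name: str
    cpu: float               # requested CPU cores
    mem_mb: int              # requested memory in MB


def schedule(container, machines):
    """First-fit placement: return the chosen machine's name, or None if nothing fits."""
    for machine in machines:
        if machine.free_cpu >= container.cpu and machine.free_mem_mb >= container.mem_mb:
            # Reserve the resources so later placements see the reduced capacity.
            machine.free_cpu -= container.cpu
            machine.free_mem_mb -= container.mem_mb
            machine.containers.append(container.name)
            return machine.name
    return None


cluster = [Machine("node-a", 1.0, 1024), Machine("node-b", 4.0, 8192)]
placements = [
    schedule(Container("web", 0.5, 512), cluster),
    schedule(Container("db", 2.0, 4096), cluster),
    schedule(Container("huge", 8.0, 1024), cluster),
]
```

The caller never names a machine. It only states what the container needs, and the scheduler decides where it runs, which is the abstraction the paragraph above describes.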

There are many frameworks for managing container images and orchestrating the container lifecycle. Some examples are:

- Docker Swarm

- Apache Mesos

- Google's Kubernetes

- Rancher

- Pivotal Cloud Foundry

- Mesosphere DC/OS

This entry was posted on Wednesday 22 June 2016
